Introduction

The question of whether ChatGPT can watch and summarize videos is a common inquiry from professionals, students, and content consumers seeking to process information efficiently. At its core, ChatGPT is a text-based language model. It cannot perceive visual or auditory data directly. It operates exclusively on textual input. However, the process of video summarization is achievable through a structured workflow that combines external tools with the model’s analytical capabilities. This article examines the technical realities, outlines practical methodologies, and evaluates the benefits and limitations of using ChatGPT for generating video summaries.

Table of Contents

Understanding ChatGPT’s Core Capabilities and Limitations

To address the central query, we must first clarify what ChatGPT can and cannot do. ChatGPT is designed to understand, generate, and manipulate text. It has no inherent ability to access the internet, view image pixels, or decode audio waveforms. Its knowledge is derived from a vast dataset of text and code, and it interacts solely through a text-based interface. Therefore, the model cannot “watch” a video in the human sense. It cannot independently extract visual scenes, identify speakers, or transcribe dialogue from a video file or stream.

The model’s strength lies in its deep comprehension of language. When provided with a complete and accurate text transcript of a video’s audio track, ChatGPT can perform exceptionally sophisticated analysis. It can identify key themes, condense arguments, extract action items, and rephrase complex explanations into digestible points. This distinction between direct perception and textual analysis is fundamental. The challenge, then, shifts from asking if ChatGPT can watch videos to determining how to procure the textual data it requires to function.

The Essential Role of Transcription and Description Tools

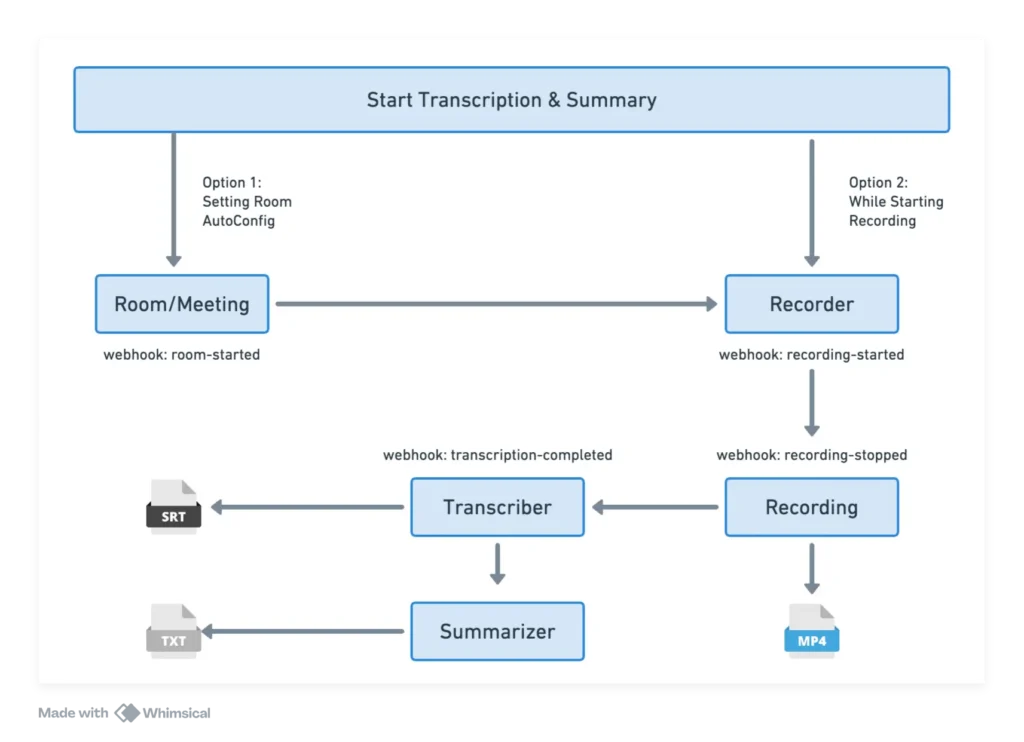

Since ChatGPT requires text, the summarization pipeline depends entirely on preparatory tools. These tools act as intermediaries, converting the multimodal content of a video into a format the language model can process. Two primary types of data are crucial: accurate transcripts and detailed visual descriptions.

Automated transcription services are the most common entry point. Numerous software applications and online platforms can generate text from a video’s audio with high accuracy. These services use automatic speech recognition technology. For videos with clear dialogue and minimal background noise, the resulting transcript can be remarkably reliable. For content with technical jargon, multiple speakers, or poor audio quality, manual review and correction of the transcript may be necessary to ensure summary accuracy.

For videos where visual information is critical—such as tutorials, documentaries, or presentations with slides—the transcript alone is insufficient. A narrator might say, “as you can see here,” while demonstrating a procedure. In these cases, supplementary visual description is required. This can be achieved through human annotation or, increasingly, through computer vision models that can generate descriptive captions for scenes, charts, and on-screen text. Integrating this descriptive text with the transcript creates a comprehensive textual representation of the video’s content.

Practical Methods for Video Summarization with ChatGPT

Implementing an effective summarization process involves a sequence of steps. The first step is content preparation. Select a video and obtain its transcript using a reliable service. If the video is platform-based, some sites offer built-in transcription features. For local files, dedicated transcription software is necessary. The output must be a clean, continuous text file, ideally with speaker identification if relevant.

The second step is context provision. When submitting the transcript to ChatGPT, the initial prompt must establish clear instructions. Simply pasting raw text may yield a generic summary. Instead, specify the desired output format. For example, you might request a structured summary divided into sections like “Main Thesis,” “Key Arguments,” “Supporting Evidence,” and “Conclusions.” You can also instruct the model to focus on specific aspects, such as technical steps, debated points, or product features mentioned.

The third step involves iterative refinement. The initial summary can be used as a foundation for deeper inquiry. Follow-up prompts can ask ChatGPT to elaborate on a particular point, create a bullet-point list for a presentation, or draft an email summarizing the video’s content for a colleague. This interactive capability allows users to tailor the output to their precise needs, moving beyond a simple abstract to actionable intelligence.

Analyzing the Benefits of This Summarization Approach

Adopting this two-stage methodology—external processing followed by linguistic analysis—offers significant advantages. The most prominent benefit is time efficiency. Viewing a one-hour lecture or meeting recording can consume a full hour. A well-crafted summary can convey the essential information in a few minutes of reading. This enables rapid skimming of multiple video sources to determine which warrant a full viewing.

Enhanced comprehension and retention represent another key advantage. ChatGPT can synthesize dense or rambling content into a clear, logical structure. It can define terms, connect concepts scattered throughout a discussion, and highlight contradictions or consensus points. This analytical processing aids in deeper understanding and memory retention, especially for complex educational or technical material.

The approach also improves accessibility. For individuals who process written information more effectively than auditory content, or for those in sound-sensitive environments, a text summary provides a critical alternative. Furthermore, summaries create searchable, referenceable documentation. Important details from a video meeting or a conference talk can be easily located within a text document, unlike being embedded in a time-coded video file.

Addressing Key Challenges and Accuracy Concerns

Despite its utility, this process is not without challenges. Accuracy is the paramount concern. The quality of the summary is inherently dependent on the quality of the input transcript. Transcription errors, especially with specialized terminology or accents, can lead to factual inaccuracies in the summary. The language model, operating on flawed data, will propagate those errors. There is no inherent fact-checking mechanism against the original video.

Context loss is another significant limitation. A transcript, even with visual descriptions, cannot fully capture tone, sarcasm, emotional emphasis, or nonverbal cues. A speaker’s ironic comment might be summarized as a straightforward statement, altering the intended meaning. Similarly, the model may struggle with highly nuanced or abstract content where meaning is dependent on cultural or subjective interpretation.

Practical constraints also exist. Processing very long videos, such as multi-hour seminars, can hit context window limits of language models. This requires segmenting the transcript, which can disrupt narrative flow. Additionally, the entire workflow—transcription, description, prompting—requires time and effort. For short videos, this overhead may negate the efficiency benefits. The cost of premium transcription or description services must also be factored into regular use.

Advanced Applications and Integrative Workflows

Beyond basic summarization, this technique enables more advanced applications. Content repurposing is a powerful use case. A single video lecture can be transformed into a blog post outline, a series of social media updates, study notes with key questions, or a structured report. ChatGPT can adapt the core content for different formats and audiences based on prompt engineering.

Research and analysis workflows are also enhanced. Analysts can process multiple video sources on a trending topic, such as quarterly earnings calls or product launches. By feeding transcripts from several companies into the model, they can request a comparative analysis identifying common strategies, differing viewpoints, and emerging industry trends. This scalable analysis would be prohibitively time-consuming through manual viewing.

Integration with other software tools creates automated pipelines. Platforms exist that connect video hosting services, automated transcription engines, and language model APIs. These systems can be configured to automatically generate and publish a summary whenever a new video is uploaded to a specific channel. This is particularly valuable for organizations producing regular webinar series or training content, ensuring immediate availability of textual references.

Best Practices for Optimal Results

To maximize the effectiveness of video summarization, adhere to several best practices. Begin with source quality. Prioritize videos with clear audio and, if possible, use human-edited transcripts for mission-critical applications. The investment in accurate input data pays dividends in output reliability.

Master the art of prompt engineering. Move beyond “summarize this.” Provide explicit directives: “Summarize the technical tutorial in five steps for a beginner audience,” or “Identify the three main hypotheses proposed in the lecture and the evidence presented for each.” Specify length constraints, such as “in 250 words” or “in three bullet points.” The more precise the instruction, the more targeted the result.

Always implement a human review loop. Treat the ChatGPT-generated summary as a powerful first draft. A subject matter expert should review it against the original video or transcript to correct subtle errors, reinstate crucial nuance, or adjust emphasis. This human-in-the-loop approach combines computational efficiency with expert judgment, creating a final product that is both efficient and trustworthy.

Future Developments in Automated Video Analysis

The current landscape of video analysis is rapidly evolving. We are moving toward more tightly integrated systems where transcription, visual analysis, and summarization occur within a unified framework. Future iterations of language models may be pre-trained on multimodal datasets, allowing them to interpret transcripts and visual scene descriptions natively within a single processing step.

Improvements in automatic speech recognition and computer vision will continuously enhance the quality of the textual data fed to models. This will reduce the need for manual correction and expand the range of video types that can be summarized reliably, including those with complex visual data like scientific visualizations or dynamic presentations.

Furthermore, we can anticipate more specialized models fine-tuned for specific video genres. A model optimized for summarizing academic lectures will differ from one designed for corporate training videos or news broadcasts. These specialized systems will better understand genre-specific structures and terminologies, yielding summaries with greater depth and relevance for professional users.

Conclusion

Can ChatGPT watch and summarize videos? Not directly. However, when equipped with a accurate textual representation of a video’s audio and visual content, its summarization capabilities are profound. The effective process involves a structured pipeline: obtaining a high-quality transcript, providing detailed visual context when needed, and guiding the model with specific, well-crafted prompts. While challenges regarding accuracy and context loss demand a careful, human-supervised approach, the benefits for productivity, comprehension, and content accessibility are substantial. As supporting technologies advance, the synergy between automated transcription services and advanced language models will make video summarization an increasingly seamless and powerful tool for information management.